Boersma, P.

Abstract: In this paper, we present an assessment of the practical value of existing traditional and nonstandard measures for discriminating healthy people from people with Parkinson's disease (PD) by detecting dysphonia. We introduce a new measure of dysphonia, pitch period entropy (PPE), which is robust to many uncontrollable confounding effects including noisy acoustic environments and normal, healthy variations in voice frequency. We collected sustained phonations from 31 people, 23 with PD. We then selected ten highly uncorrelated measures, and an exhaustive search of all possible combinations of these measures finds four that in combination lead to overall correct classification performance of 91.4%, using a kernel support vector machine. In conclusion, we find that nonstandard methods in combination with traditional harmonics-to-noise ratios are best able to separate healthy from PD subjects. The selected nonstandard methods are robust to many uncontrollable variations in acoustic environment and individual subjects, and are thus well suited to telemonitoring applications.

Abstract: The meaning of language is represented in regions of the cerebral cortex collectively known as the 'semantic system'. However, little of the semantic system has been mapped comprehensively, and the semantic selectivity of most regions is unknown. Here we systematically map semantic selectivity across the cortex using voxel-wise modelling of functional MRI (fMRI) data collected while subjects listened to hours of narrative stories. We show that the semantic system is organized into intricate patterns that seem to be consistent across individuals. We then use a novel generative model to create a detailed semantic atlas. Our results suggest that most areas within the semantic system represent information about specific semantic domains, or groups of related concepts, and our atlas shows which domains are represented in each area. This study demonstrates that data-driven methods, commonplace in studies of human neuroanatomy and functional connectivity, provide a powerful and efficient means for mapping functional representations in the brain.

Figure 1: Components of a typical automatic speaker recognition system. In the enrollment mode, a speaker model is created with the aid of a previously created background model; in recognition mode, both the hypothesized model and the background model are matched, and the background score is used in normalizing the raw score.
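The Figure 1 caption says only that the background score is used to normalize the raw score. A minimal sketch of one common way to do this follows, assuming both scores are average per-frame log-likelihoods and that normalization is a simple subtraction (a log-likelihood ratio). The function names and the threshold are hypothetical and are not taken from the source.

```python
def average_log_likelihood(frame_logliks):
    """Average the per-frame log-likelihoods of one utterance under one model."""
    return sum(frame_logliks) / len(frame_logliks)


def normalized_score(speaker_frame_logliks, background_frame_logliks):
    """Raw speaker-model score minus the background-model score.

    Assumption: subtraction of average log-likelihoods; the source does not
    specify the exact normalization.
    """
    raw = average_log_likelihood(speaker_frame_logliks)
    background = average_log_likelihood(background_frame_logliks)
    return raw - background


def accept(speaker_frame_logliks, background_frame_logliks, threshold=0.0):
    """Accept the identity claim when the normalized score reaches the threshold."""
    return normalized_score(speaker_frame_logliks, background_frame_logliks) >= threshold
```

A positive normalized score means the hypothesized speaker model explains the utterance better than the background model does; the threshold would be tuned on held-out trials.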

openSMILE supports on-line incremental processing for all implemented features as well as off-line and batch processing. Numeric compatibility with future versions is ensured by means of unit tests.

Speech frame (top), linear prediction (LP) residual (middle), and glottal flow estimated via inverse filtering (bottom).

Figure 9: Principle of support vector machine (SVM). A maximum-margin hyperplane that separates the positive (+1) and negative (-1) training examples is found by an optimization process. SVMs have excellent generalization performance.

Figure 11: The concept of modern sequence kernel SVM. Variable-length utterances are mapped into fixed-dimensional supervectors, followed by intersession variability compensation and SVM training.

Figure 12: Example of detection error trade-off (DET) plot presenting various subsystems and a combined system using score-level fusion.
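The Figure 12 caption mentions score-level fusion and a DET plot. As a rough sketch of both ideas, assuming fusion is a weighted sum of per-trial subsystem scores (the source does not say which fusion rule was used), the operating points behind a DET curve can be computed as below. The function names and weights are hypothetical.

```python
def fuse_scores(subsystem_scores, weights):
    """Combine per-trial scores from several subsystems by a weighted sum."""
    n_trials = len(subsystem_scores[0])
    return [
        sum(w * scores[i] for w, scores in zip(weights, subsystem_scores))
        for i in range(n_trials)
    ]


def det_points(target_scores, nontarget_scores):
    """Return (threshold, false_alarm_rate, miss_rate) for each candidate threshold.

    A trial is accepted when its score is greater than or equal to the threshold.
    """
    thresholds = sorted(set(target_scores) | set(nontarget_scores))
    points = []
    for t in thresholds:
        miss = sum(s < t for s in target_scores) / len(target_scores)
        false_alarm = sum(s >= t for s in nontarget_scores) / len(nontarget_scores)
        points.append((t, false_alarm, miss))
    return points
```

A DET plot usually draws these two error rates on normal-deviate axes; the sketch stops at the raw rates and leaves the axis warping and plotting out.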

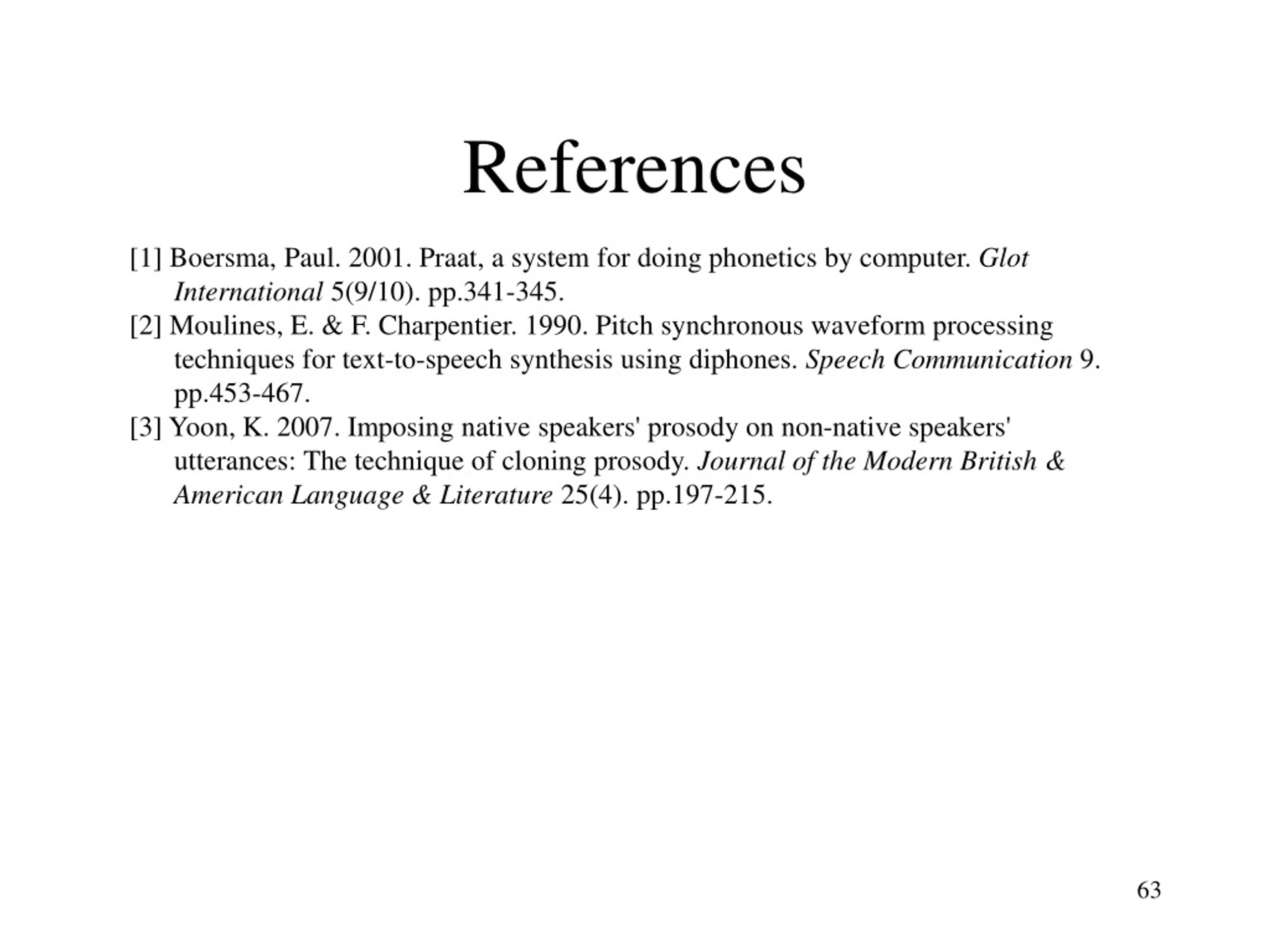

# Praat: a system for doing phonetics by computer (Windows)

Abstract: We introduce the openSMILE feature extraction toolkit, which unites feature extraction algorithms from the speech processing and the Music Information Retrieval communities. Audio low-level descriptors such as CHROMA and CENS features, loudness, Mel-frequency cepstral coefficients, perceptual linear predictive cepstral coefficients, linear predictive coefficients, line spectral frequencies, fundamental frequency, and formant frequencies are supported. Delta regression and various statistical functionals can be applied to the low-level descriptors. openSMILE is implemented in C++ with no third-party dependencies for the core functionality. It is fast, runs on Unix and Windows platforms, and has a modular, component-based architecture, which makes extensions via plug-ins easy.
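To illustrate what applying delta regression and statistical functionals to a low-level descriptor means, here is a minimal sketch in plain Python. It assumes the widely used regression formula d_t = Σ n·(x_{t+n} − x_{t−n}) / (2·Σ n²) with edge frames repeated at the boundaries; whether openSMILE uses exactly this formula and padding is an assumption, and the function names are hypothetical rather than part of the openSMILE API.

```python
def delta_regression(frames, window=2):
    """Delta coefficients of a one-dimensional descriptor contour (e.g. F0 per frame).

    Frames beyond the start or end are replaced by the first or last frame.
    """
    denom = 2 * sum(n * n for n in range(1, window + 1))
    last = len(frames) - 1
    deltas = []
    for t in range(len(frames)):
        acc = 0.0
        for n in range(1, window + 1):
            ahead = frames[min(t + n, last)]
            behind = frames[max(t - n, 0)]
            acc += n * (ahead - behind)
        deltas.append(acc / denom)
    return deltas


def functionals(contour):
    """A few simple statistical functionals summarizing a contour as fixed-size values."""
    return {
        "mean": sum(contour) / len(contour),
        "min": min(contour),
        "max": max(contour),
    }
```

Functionals such as these turn a variable-length contour into a fixed number of values, which is what makes utterances of different durations comparable.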
